Our paper at EMNLP 2023, “Learning Interpretable Style Embeddings via Prompting LLMs”, used GPT-3 to generate a synthetic dataset for training interpretable text style embeddings, in which each dimension of the embedding vector has a meaning that a human can interpret.

Interpretability, Superposition, and Entangled Representations

Typically, embeddings represent text with a $d$-dimensional vector. For example, word2vec embeddings commonly used $d = 300$: each word is represented by a $300$-dimensional vector, and words with similar meanings lie close together in vector space. So the words “tiger” and “zebra” might be considered similar because they are both “animals” and because they are both “striped”.
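To make “close together in vector space” concrete, here is a minimal sketch of cosine similarity, a common way to compare word embeddings. The 4-dimensional vectors are made up for illustration (real word2vec vectors have $d = 300$), and their dimensions have no assigned meaning.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical toy vectors; the individual dimensions are not interpretable.
embeddings = {
    "tiger": [0.9, 0.8, 0.1, 0.7],
    "zebra": [0.8, 0.9, 0.2, 0.1],
    "table": [0.1, 0.0, 0.9, 0.2],
}

print(round(cosine_similarity(embeddings["tiger"], embeddings["zebra"]), 3))
print(round(cosine_similarity(embeddings["tiger"], embeddings["table"]), 3))
```

With these toy values, “tiger” scores much closer to “zebra” than to “table”, even though no single coordinate says why.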

These embeddings, however, are not easily interpretable. None of the $300$ dimensions directly corresponds to the “animal”-ness or “striped”-ness of the text; instead, each property present in a text influences the values of all $d$ dimensions. This is sometimes called superposition, a phenomenon whereby neural networks tend to learn representations that “entangle” features across all $d$ dimensions, rather than “disentangled” representations in which each dimension of the embedding vector corresponds 1:1 to a directly interpretable human feature such as “color”, “size”, or “animal”-ness.
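A toy illustration of the difference, assuming a rotation as a stand-in for entanglement: start from a hypothetical 2-dimensional disentangled space (dimension 0 = “animal”, dimension 1 = “striped”) and rotate every vector by 30 degrees. Similarities between words are unchanged, but each feature now leaks into both coordinates. Real networks need not entangle features by a simple rotation, and this sketch does not model superposition in the stricter sense of packing more features than dimensions.

```python
import math

# Hypothetical disentangled embeddings: dim 0 = "animal", dim 1 = "striped".
disentangled = {
    "tiger": [1.0, 1.0],    # an animal, striped
    "horse": [1.0, 0.0],    # an animal, not striped
    "barcode": [0.0, 1.0],  # not an animal, striped
}


def rotate(v: list[float], theta: float) -> list[float]:
    """Rotate a 2-d vector by theta radians (an orthogonal change of basis)."""
    c, s = math.cos(theta), math.sin(theta)
    return [c * v[0] - s * v[1], s * v[0] + c * v[1]]


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two 2-d vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


# "Entangled" version of the same space: every vector rotated by 30 degrees.
entangled = {word: rotate(v, math.radians(30)) for word, v in disentangled.items()}

# "horse" has only the animal feature, yet both coordinates are now non-zero.
print([round(x, 3) for x in entangled["horse"]])  # → [0.866, 0.5]

# Pairwise similarities are identical before and after the rotation.
for a, b in [("tiger", "horse"), ("tiger", "barcode"), ("horse", "barcode")]:
    print(a, b, round(cosine(disentangled[a], disentangled[b]), 3),
          round(cosine(entangled[a], entangled[b]), 3))
```

Because similarity is all the training signal sees, both spaces are equally good to the model; only the disentangled one is readable by a human.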

Training Interpretable Text Style Embeddings

In our paper, we train “text style embeddings”: embeddings that map two texts close together in vector space if their writing styles are similar. These kinds of embeddings are useful in downstream tasks such as authorship attribution. In these tasks interpretability is highly desirable; however, embeddings are typically not directly interpretable because of the entangled representations neural networks tend to learn.
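One simple way style embeddings could be used for authorship attribution is nearest-neighbor matching: embed a text of unknown authorship and pick the known author whose reference embedding is most similar. The sketch below assumes the embeddings are already computed; the 3-dimensional vectors and author names are hypothetical placeholders for a real model's output.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def attribute_author(query: list[float], author_embeddings: dict[str, list[float]]) -> str:
    """Return the known author whose style embedding is closest to the query."""
    return max(author_embeddings, key=lambda name: cosine(query, author_embeddings[name]))


# Hypothetical style embeddings for two known authors and one unknown text.
authors = {
    "author_a": [0.9, 0.1, 0.8],
    "author_b": [0.1, 0.9, 0.2],
}
query = [0.8, 0.2, 0.7]

print(attribute_author(query, authors))  # → author_a
```

The prediction alone says nothing about *why* the texts match, which is exactly where an opaque embedding becomes hard to audit.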

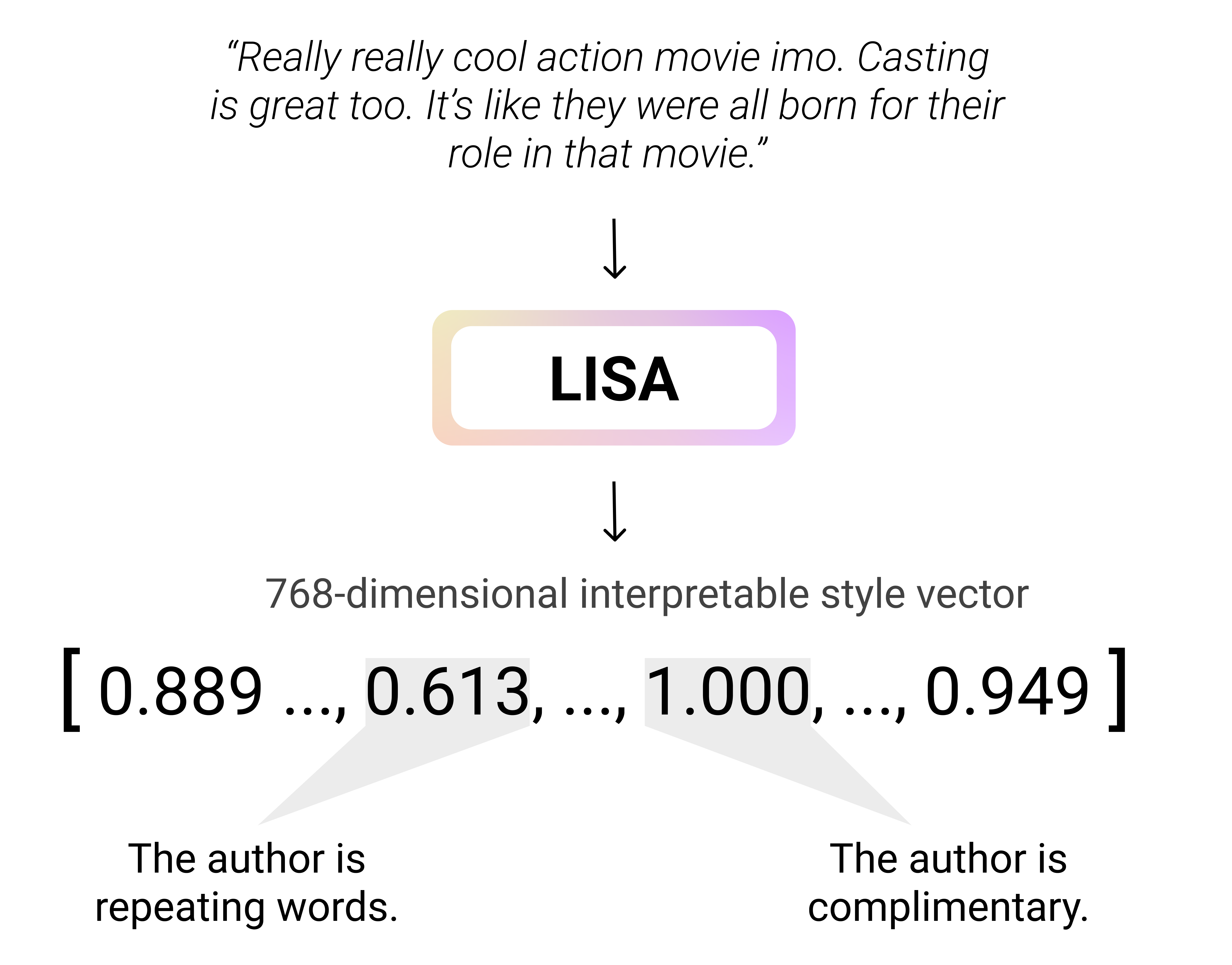

In our paper, we train $768$-dimensional text style embeddings (which we call LISA embeddings) in which each dimension directly represents a stylistic feature of the text that a human can interpret.
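When every dimension has a name, an embedding can be read directly. A minimal sketch, assuming a hypothetical 4-dimensional embedding whose dimension names are invented for illustration (the real LISA model has 768 dimensions with its own descriptions): list a text's strongest features, and explain a similarity score by ranking each dimension's contribution $u_i v_i$ to the dot product.

```python
# Hypothetical dimension names; not the actual LISA feature descriptions.
DIMENSIONS = [
    "uses formal language",
    "uses emoticons",
    "writes in all lowercase",
    "uses long sentences",
]


def top_features(embedding: list[float], names: list[str], k: int = 2) -> list[tuple[str, float]]:
    """Return the k (name, value) pairs with the largest values."""
    ranked = sorted(zip(names, embedding), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def shared_features(u: list[float], v: list[float], names: list[str], k: int = 2) -> list[tuple[str, float]]:
    """Rank dimensions by their contribution u[i] * v[i] to the dot product u . v."""
    contributions = [(name, a * b) for name, a, b in zip(names, u, v)]
    return sorted(contributions, key=lambda pair: pair[1], reverse=True)[:k]


# Hypothetical embeddings for two texts.
text_a = [0.92, 0.05, 0.10, 0.81]
text_b = [0.88, 0.02, 0.07, 0.35]

print(top_features(text_a, DIMENSIONS))
print(shared_features(text_a, text_b, DIMENSIONS))
```

Here “uses formal language” dominates both the description of `text_a` and the explanation of why `text_a` and `text_b` are similar.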

Using GPT-3 to Train Interpretable Embeddings

One obstacle to training an interpretable embedding model is obtaining labeled data for many texts. If the vector has $d = 768$ dimensions, then each text in your dataset of texts $T$ must be labeled with $768$ properties, which results in $d \times |T|$ total labels. Acquiring this much labeled data from human annotators can be expensive and burdensome.
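The arithmetic behind that cost is simple but grows quickly. The dataset size (10,000 texts) and labeling speed (5 seconds per label) below are hypothetical numbers chosen only to illustrate the scale.

```python
def labels_required(d: int, num_texts: int) -> int:
    """Total labels needed: one label per embedding dimension for every text (d * |T|)."""
    return d * num_texts


def annotation_hours(num_labels: int, seconds_per_label: float) -> float:
    """Convert a label count into annotator hours at an assumed, fixed labeling speed."""
    return num_labels * seconds_per_label / 3600


# Hypothetical numbers for illustration only.
total = labels_required(768, 10_000)
print(total)  # → 7680000
print(round(annotation_hours(total, 5.0)))  # → 10667
```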

We overcome this obstacle by using GPT-3 to annotate texts with their stylistic properties, creating a synthetic labeled dataset. Our method of using a synthetic dataset generated by an LLM is generally applicable to training other kinds of interpretable text embeddings. See our paper for in-depth details on our methodology.
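The general shape of LLM-based annotation can be sketched as one yes/no question per (text, attribute) pair, with each reply parsed into a binary label. This is a simplified illustration, not the paper's exact prompting procedure: the attribute list and prompt wording are hypothetical, and `query_llm` is any caller-supplied function that sends a prompt to a model and returns its reply. A keyword-based stand-in replaces the real model so the example runs offline.

```python
from typing import Callable

# Hypothetical attribute descriptions; the paper's actual attributes differ.
ATTRIBUTES = ["The author uses formal language.", "The author uses emoticons."]


def build_prompt(text: str, attribute: str) -> str:
    """Ask whether one stylistic statement describes one passage."""
    return (
        f'Passage: "{text}"\n'
        f"Statement: {attribute}\n"
        "Does the statement accurately describe the passage's style? Answer Yes or No:"
    )


def parse_answer(answer: str) -> int:
    """Map a free-text Yes/No reply to a binary label (anything else -> 0)."""
    return 1 if answer.strip().lower().startswith("yes") else 0


def annotate(texts: list[str], attributes: list[str],
             query_llm: Callable[[str], str]) -> list[list[int]]:
    """Return one label vector per text, using len(texts) * len(attributes) LLM calls."""
    return [
        [parse_answer(query_llm(build_prompt(t, a))) for a in attributes]
        for t in texts
    ]


def fake_llm(prompt: str) -> str:
    """Offline stand-in for a real LLM; answers with simple keyword rules."""
    if "formal language" in prompt:
        return "Yes" if "Dear" in prompt else "No"
    if "emoticons" in prompt:
        return "Yes" if ":)" in prompt else "No"
    return "No"


labels = annotate(["Dear Sir, I write to inform you.", "lol ok :)"], ATTRIBUTES, fake_llm)
print(labels)  # → [[1, 0], [0, 1]]
```

Note that the number of LLM calls is again $d \times |T|$; the savings come from replacing human effort, not from reducing the label count.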

Dataset and Models

The datasets and models from the paper (the StyleGenome synthetic dataset and the LISA model) can be found here.

Paper and Citation

This paper was joint work with Delip Rao, Ansh Kothary, Kathleen McKeown, and Chris Callison-Burch.

You can find the paper here and cite the paper with:

```bibtex
@inproceedings{patel-etal-2023-learning,
  title = "Learning Interpretable Style Embeddings via Prompting {LLM}s",
  author = "Patel, Ajay and Rao, Delip and Kothary, Ansh and McKeown, Kathleen and Callison-Burch, Chris",
  editor = "Bouamor, Houda and Pino, Juan and Bali, Kalika",
  booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2023",
  month = dec,
  year = "2023",
  address = "Singapore",
  publisher = "Association for Computational Linguistics",
  url = "https://aclanthology.org/2023.findings-emnlp.1020",
  doi = "10.18653/v1/2023.findings-emnlp.1020",
  pages = "15270--15290",
  abstract = "Style representation learning builds content-independent representations of author style in text. To date, no large dataset of texts with stylometric annotations on a wide range of style dimensions has been compiled, perhaps because the linguistic expertise to perform such annotation would be prohibitively expensive. Therefore, current style representation approaches make use of unsupervised neural methods to disentangle style from content to create style vectors. These approaches, however, result in uninterpretable representations, complicating their usage in downstream applications like authorship attribution where auditing and explainability is critical. In this work, we use prompting to perform stylometry on a large number of texts to generate a synthetic stylometry dataset. We use this synthetic data to then train human-interpretable style representations we call LISA embeddings. We release our synthetic dataset (StyleGenome) and our interpretable style embedding model (LISA) as resources.",
}
```