Our ICLR 2023 paper, “Bidirectional Language Models Are Also Few-shot Learners”, makes a surprising discovery: older models like T5, which predate GPT-3, were promptable all along and could perform in-context learning.

Bidirectional Models (T5) vs. Unidirectional Models (GPT)

Older models like T5 are trained on a denoising objective (similar to the BERT masked language modeling objective), where they learn to predict masked-out words in a sentence using the context on both sides. GPT-style models are trained on the more classic causal language modeling objective: predicting the next word in a sentence given only the words before it (next-token prediction).
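To make the difference concrete, here is a small sketch of how a single sentence turns into training examples under each objective. It is illustrative only: the function names are hypothetical, words stand in for subword tokens, the masked spans are chosen by hand rather than sampled, and the `<extra_id_N>` sentinel names follow the convention used by the Hugging Face T5 tokenizer.

```python
def causal_lm_examples(tokens):
    """Next-token prediction: every prefix is paired with the token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def span_corruption_example(tokens, spans):
    """T5-style denoising: replace each (start, end) span with a sentinel.

    The input keeps the context on both sides of every masked span; the
    target lists each sentinel followed by the words it replaced, and ends
    with one final sentinel.
    """
    inputs, targets = [], []
    cursor = 0
    for k, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{k}>"
        inputs.extend(tokens[cursor:start])  # visible text before the span
        inputs.append(sentinel)              # the span itself is hidden
        targets.append(sentinel)
        targets.extend(tokens[start:end])    # the model must reconstruct it
        cursor = end
    inputs.extend(tokens[cursor:])           # visible text after the last span
    targets.append(f"<extra_id_{len(spans)}>")
    return inputs, targets


sentence = "Thank you for inviting me to your party last week".split()

# Causal LM: the model only ever sees the left context.
for prefix, next_token in causal_lm_examples(sentence)[:3]:
    print(" ".join(prefix), "->", next_token)

# Denoising: the model sees both sides of each gap.
corrupted, target = span_corruption_example(sentence, spans=[(2, 4), (8, 9)])
print(" ".join(corrupted))
print(" ".join(target))
```

The denoising input still contains the words after each gap, which is what makes the objective bidirectional; the causal examples never include anything to the right of the word being predicted.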

Empirically, the bidirectional T5-style denoising objective has been shown to learn stronger language representations during self-supervised pre-training than the GPT-style objective. The GPT-3 authors themselves acknowledge the lack of bidirectionality as a weakness of their model:

GPT-3 has several structural and algorithmic limitations … our experiments do not include any bidirectional architectures … trying to make bidirectional models work with few- or zero-shot learning … could help achieve the “best of both worlds”.

Why do GPT-style models dominate today?

If the bidirectional T5-style denoising objective is superior to next-token prediction, why have unidirectional GPT-style models become the dominant kind of large language model that researchers train? The answer is the popularity of GPT-3, which was the first model to demonstrate “in-context learning” through prompting.

Language models that predate GPT-3, like T5, were not known to exhibit strong few-shot learning capabilities or the emergent property of in-context learning. This discovery is in fact the title of the GPT-3 paper: “Language Models are Few-Shot Learners”. Once GPT-3 demonstrated this breakthrough emergent property, there was no turning back. Only language models compatible with prompting would be of interest to researchers, even if other types of language models had superior properties. And all the evidence at the time suggested that unidirectional, GPT-style models trained on next-token prediction were the only type of language model that could be prompted.

Surprise: T5 can be prompted and outperforms GPT-style models.

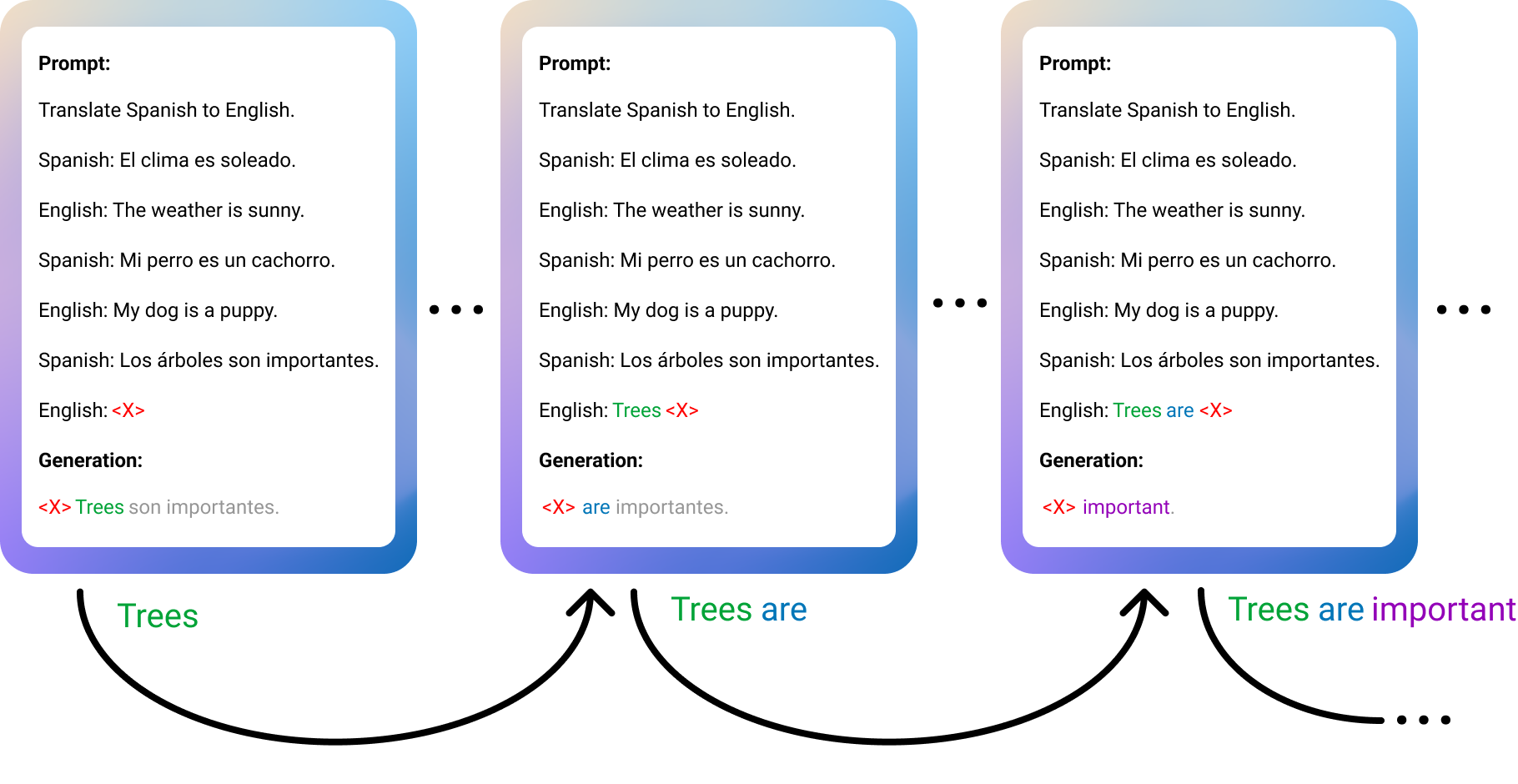

Using an inference technique we call Sequential Autoregressive Prompting (SAP), we find that older language models like T5 can be prompted, and that they can even outperform their GPT-style counterparts while using 50% fewer parameters. The technique requires no fine-tuning and can be applied to a vanilla T5 model.
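The rough shape of such a sequential prompting loop can be sketched as follows. This is a simplified illustration under stated assumptions, not the exact procedure from the paper: it assumes each step places a mask at the end of the prompt, asks the model to fill it in, keeps only the first generated word, and re-prompts. The names `sap_generate` and `toy_infill` are hypothetical, and `toy_infill` is a stand-in for a real call to a denoising model such as T5.

```python
from typing import Callable

MASK = "<extra_id_0>"  # first sentinel token in the Hugging Face T5 tokenizer


def sap_generate(prompt: str, infill: Callable[[str], str], max_words: int = 20) -> str:
    """Build an output one word at a time by repeatedly infilling a trailing mask.

    `infill` is any function mapping text that ends in MASK to the text the
    model proposes for that mask (hypothetical interface for this sketch).
    """
    words = []
    for _ in range(max_words):
        context = " ".join([prompt, *words, MASK])
        fill = infill(context).split()
        if not fill:           # the model produced nothing: stop
            break
        words.append(fill[0])  # keep only the first word, then re-prompt
    return " ".join(words)


def toy_infill(context: str) -> str:
    """Stand-in for a real model: continues a fixed sentence two words at a time."""
    sentence = "the cat sat on the mat".split()
    seen = context.split()[:-1]  # drop the trailing mask
    done = len(seen) - 1         # the toy prompt below is a single word
    return " ".join(sentence[done:done + 2])


print(sap_generate("Complete:", toy_infill))
```

With a real model, `infill` would wrap a single generation call on the frozen, pre-trained checkpoint, so the loop itself involves no training or weight updates.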

Conclusion: Is GPT the Wrong Architecture?

It’s worth noting that T5 was released well before GPT-3. While GPT-3 was the first model credited with having in-context learning abilities, our paper demonstrates T5 actually had hidden in-context learning abilities before GPT-3. So, is GPT the wrong architecture? It’s possible.

In an alternate reality, had this discovery been made before GPT-3 won the race to be the first model to achieve in-context learning, it might have given researchers some pause before committing fully to GPT-style models. Today, our discovery should nudge researchers to re-evaluate whether other self-supervised training objectives and language model architectures may outperform GPT-style models, given that our paper demonstrates, for the first time, that other types of language models can also achieve strong prompting and in-context learning abilities.

Slides

For a recorded video walkthrough and slides, see the ICLR presentation page.

Paper and Citation

This paper was joint work with Bryan Li, Mohammad Rasooli, Noah Constant, Colin Raffel, Chris Callison-Burch.

You can find the paper here and cite the paper with:

@inproceedings{patel2022bidirectional,
  title={Bidirectional Language Models Are Also Few-shot Learners},
  author={Patel, Ajay and Li, Bryan and Rasooli, Mohammad Sadegh and Constant, Noah and Raffel, Colin and Callison-Burch, Chris},
  booktitle={The Eleventh International Conference on Learning Representations},
  year={2022}
}